What is Chat GPT?

Chat GPT has taken the internet by storm and has rapidly built a huge user base, surpassing the 1 million user milestone in just 5 days (in comparison to Facebook’s 10 months and Netflix’s 3 years). Classed as one of the most developed artificial intelligence systems in history, the chatbot has capabilities that are nothing short of awe-inspiring. From explaining black holes so that ‘a five year old can understand’, to crafting the most sincere and heartfelt break-up text, or even writing an entire script for a fictional TV show, Chat GPT is undeniably changing the digital landscape of Artificial Intelligence. However, ever since its inception, there have been concerns surrounding the ethics of using this powerful tool.

An AI generated artwork from DALL-E 2, using the prompt “Women in Tech Digital Art”.

Concerns

The use of Chat GPT has raised concerns about its potential to replace jobs, facilitate cheating in academic settings and spread false or biased information. These ethical concerns stem from the fact that Chat GPT can mimic human-like conversations and blur the line between human and machine. The effectiveness of the chatbot thus poses a more complex philosophical dilemma, namely because AI ‘somehow gets closer to our skin than other technologies’ (Müller, Ethics of Artificial Intelligence and Robotics). It has been engineered to appeal to how we humans see ourselves, as feeling, thinking, intelligent beings, and consequently poses a significant risk to certain job roles, as it now has the ability to learn and adapt to individual needs in real time. Not only does Chat GPT have the potential to render certain job roles obsolete, it also has bias ingrained within the language model. Whilst it was trained on over 300 billion words sourced from the internet to improve neutrality and accuracy, the reliability of that source material is itself a concern. This therefore raises the question: is the internet itself an objective and unbiased source? The answer is a resounding no.

The Gendered Bias of Artificial Intelligence

If we consider the internet to be a mirror of society, one that reflects its biases and prejudices amplified on a global scale, then Chat GPT is a digital bridge that distils this information for the user’s requirements. As a result, gendered, racialised, homophobic and ableist biases are already ingrained in the algorithms of Chat GPT. For example, in a study published in PNAS, researchers conducted a Google image search for the word ‘person’ in 153 different countries and recorded the proportion of men and women in the first 100 results of each search. They found that in countries with higher national levels of gender inequality, the results typically showed a greater proportion of men, with 90% of the images in Turkey and Hungary’s search results depicting men. According to research from the Guardian in 2013, autocomplete results suggested gender stereotypes, with the top result being ‘women should stay at home’. Other top results included ‘women should be in the kitchen’, ‘women should be slaves’, and ‘women should be disciplined’. This exhibits the pervasive gendered stereotypes that exist and shows how user search patterns and sexist language infiltrate every corner of the internet. It is no surprise then that Chat GPT perpetuates similar patterns of speech and stereotypes.

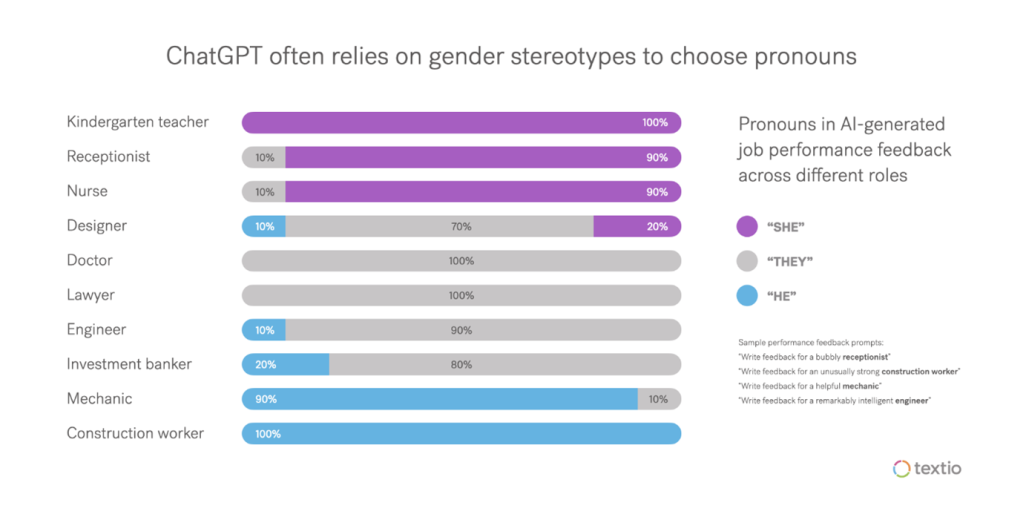

In a study conducted by Fast Company, researchers asked Chat GPT to write basic performance feedback for various professions, using prompts including ‘write feedback for a helpful mechanic’, ‘a bubbly receptionist’, ‘a remarkably intelligent engineer’ and ‘a remarkably strong construction worker’. The article found that Chat GPT automatically made clichéd remarks and presumed employee gender, even when the prompt given was highly generic.

With regard to the content of the employee evaluation, they also found that, in all cases, the feedback written for the female employee was longer and involved more criticism. This reflects the real-world biases held against women in the workplace, due to women’s differing communication styles, their perceived incompetence or ‘bossiness’, and also their lack of representation in leadership roles.

The changing skillsets demanded by AI will have a more adverse impact on women than on men, namely because of the ‘Digital Divide’. This refers to the increasing levels of digital illiteracy amongst women, and the consequent issue of their exclusion from the global workforce. Sadly, this vicious cycle of digital inequality stems from early gender stereotyping and the segregation of genders in education systems, resulting in very few women and girls opting for further education in STEM subjects. These cultural and social norms thus correlate with the lack of representation in technology-related fields, particularly in roles associated with higher status. In fact, according to a study entitled ‘Where are the women? Mapping the gender job gap in AI’, women in data science and AI have higher formal education levels than men across all industries. Although women are highly qualified across the board, the same report also found that women in the tech sector experience higher turnover and attrition rates than their male counterparts and are more likely to occupy jobs associated with lower pay and status (usually working within analytics, data preparation and exploration).

Criticism and Controversies Surrounding Chat GPT

The internet has been very quick to label Chat GPT as ‘sexist’ and ‘racist’ or, conversely, ‘too woke’. The latter label came from Elon Musk, who objected to Chat GPT’s resistance to ‘say a racial slur in an absurd hypothetical situation, where doing so would save millions of people from a nuclear bomb’ (VICE). In further tweets he stressed the need for ‘free speech’ and claimed ‘What we need is TruthGPT’. Musk was in fact an early investor in OpenAI, the creator of Chat GPT, in 2015, and resigned from its board in 2018.

Chat GPT has also been criticized for refusing to ‘make jokes about women’ or to write a 10-paragraph argument for ‘using more fossil fuels’. Critics have even described Chat GPT as just an automated ‘mansplaining machine’, because it often gets answers wrong while generating ‘vaguely plausible sounding, yet totally fabricated and baseless lectures in an instant with unflagging confidence in its own correctness on any topic, without concern, regard or even awareness of the level of expertise of its audience’ (@andrewfeeney). However, unlike humans, algorithms are non-sentient and don’t have a fragile self-esteem that requires them to diminish or elevate others, nor can they subconsciously favour certain groups over others or make moral judgements.

Rectifying Bias

So how can this bias be rectified? For one, it’s important to acknowledge that the developers themselves share responsibility for manufacturing bias, and they must ensure that their models are designed in an inclusive manner. 78% of global professionals with AI skills are male, which demonstrates how the industry itself is skewed in favour of men. Gender segregation in the tech industry has been likened to a ‘brotopia’ by Emily Chang, a Silicon Valley insider, who argues that these toxic, misogynistic workplaces exclude women from technology development and from higher positions in the field. Frida Polli, CEO of Pymetrics, similarly questioned this lack of diversity in AI, asking:

“Can you imagine if all the toddlers in the world were raised by 20-year-old men? That’s what our A.I. looks like today. It’s being built by a very homogenous group.”

This lack of accountability and transparency on the part of developers could lead to a failure to acknowledge and address gender biases in their systems, painting a bleak picture for an industry that should be at the forefront of innovation and progress. Furthermore, to make AI more accurate, fair, and inclusive for all groups, it is essential to encourage more women and girls to pursue STEM and prioritize diversity in the design, development, and testing of these systems.

Training AI and its Potential Impact

Training Artificial Intelligence is the logical step forward in order to create a more comprehensive, unbiased and accurate model for public use. For example, LinkedIn has launched a new AI-led initiative that invites relevant member experts to contribute their expertise to AI-generated prompts. By sharing their lessons, anecdotes and advice, these members will be directly supporting and training the AI engine to be more efficient. Although training AI will lead to a more accurate system, it is worth asking: who will be targeted in order to achieve this? Women and minority groups in particular may be a focus for developers seeking to counter bias within AI, which surely must be a good thing? Initially, the proposed change may appear to be a positive and essential step. However, the increased involvement of trained knowledge workers raises the chance of job roles being phased out and replaced by AI.

Gender inequality in the Technology Sector and the Impact of AI on Women’s Jobs

Whilst Artificial Intelligence is constantly changing and evolving, it’s worth asking an important question: at what point does this end? Historically, when technology advances, it is women who get left behind. As AI continues to advance, there is a possibility that women’s jobs may disproportionately be replaced by automated systems that are considered more efficient and cost-effective. Some even argue that AI will not promote gender equality but will instead exacerbate existing gender inequalities in labour markets. This has been discussed in an article entitled ‘A Gender Perspective on Artificial Intelligence and Jobs’, which contends that gendered work segregation and digitised automation are ‘entangled’ and result in a vicious cycle of digital inequality. Ultimately, the advancement of technology may work against women and will lead to a greater demand for the specialised AI skillsets necessary to acclimate to and engage with new AI systems.

In the end, women are far more likely to become collateral damage if AI continues to dominate and progress the way it is now, due to their lack of representation in the technology sector. If women in STEM are already underrepresented and occupy many roles which may be replaced by AI, how can we protect our incomes as women in tech? Diversifying the workforce and giving women equal access to the resources, training and skills needed to meet the demand for AI skills and digital literacy would be an initial way to combat the digital divide. In addition, in order to counteract the bias of AI and its resulting gender disparity issues, more women and minorities need to be at the forefront of these technological advancements. If Chat GPT and AI are going to transform technology on a global scale, shouldn’t those who are creating the technology accurately represent the diversity of our world?
